I was looking for something that gave the artists a lot of freedom, that was fast, and that allowed the same algorithm to be used in both game and editor. The last point was especially important since we had much success with our WYSIWYG editor for Amnesia, and we did not want terrain to break this by requiring some complicated creation process.

Even once I started working on the textures, I was unsure of the exact approach to take. I had at least decided to use some form of texture splatting as the base. However, there are a lot of ways to go about this, the two major directions being to either do it all in real-time or to render to cache textures in some manner.

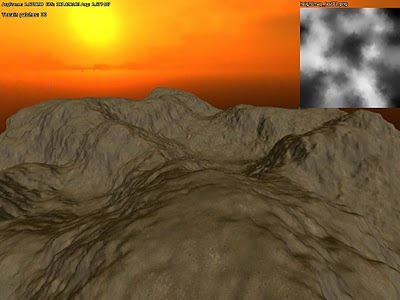

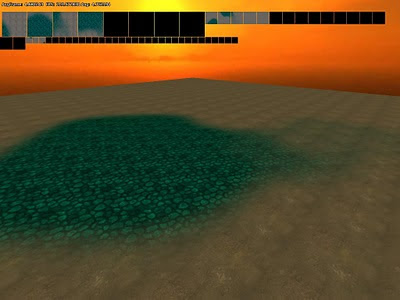

Before doing any proper work on the texturing algorithm I wanted to see how the texturing looked on some test terrain. In the image below I am simply projecting a tiling texture along the y-axis.

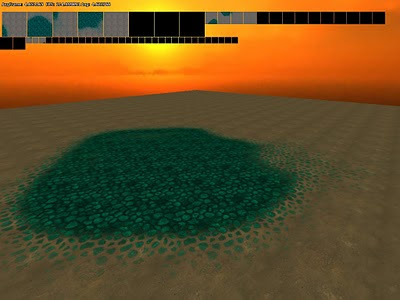
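As a rough illustration of what projecting along the y-axis means, here is a minimal Python sketch. The function names and `tile_size` are made up for this example and are not from the engine. The second function shows the geometric reason slopes stretch: a surface tilted by an angle is longer than its horizontal footprint by a factor of 1 / cos(angle), yet it receives the same number of texels.

```python
import math

def y_projected_uv(x, y, z, tile_size=4.0):
    """Project a world-space position straight down the y-axis.

    The height (y) is ignored on purpose; only x and z select the texel.
    tile_size is a hypothetical world-space size of one texture repeat.
    """
    return (x / tile_size, z / tile_size)

def slope_stretch(slope_degrees):
    """Ratio of surface length to horizontal footprint for a given slope."""
    return 1.0 / math.cos(math.radians(slope_degrees))

print(y_projected_uv(8.0, 100.0, 2.0))   # height has no effect on the uv
print(slope_stretch(30.0), slope_stretch(60.0))
```

A 30 degree slope stretches the texture by about 1.15, while a 60 degree slope doubles it, so the stretching only becomes obvious on quite steep terrain.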

Although I had checked other games, I was not sure how good the y-axis projection would look. What I was worried about was that there would be a lot of stretching on slopes. It turned out that it was not that bad though, and the worst case looks something like this:

While visible, it was not as bad as I first thought it would be. Seeing this made me more confident that I could project along the y-axis for all textures, something that allowed for the cached texture approach. If I did all blending in real-time I would have been able to have a special uv-mapping for slopes, but now that y-axis projection worked, this was no longer essential. However, before I could start testing texture caching, I needed to implement the blending.

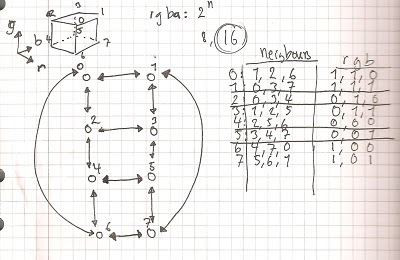

The plain-vanilla way to do this is to have an alpha texture for each texture layer and then draw one texture layer after another. Instead of having many render passes, I wanted to do as much blending as possible in a single draw call. By using an RGBA texture for the alpha I could do a maximum of 4 at the same time. I first considered this, but then I saw a paper by Martin Mittring from Crytek called "Advanced virtual texture topics" where an interesting approach was suggested. By using an RGB texture up to 8 textures could be blended, by letting each corner of an RGB cube be a texture. A problem with this approach is that each texture can only be nicely blended with 3 other corners (textures), restricting artists a bit. See below how texture layers are connected (a quick sketch by me):

Side note: Yes, it would be possible to use an RGBA texture with this technique and let the corners of a hyper cube represent all of the textures. This would allow each texture type to have 4 textures it could blend with and a maximum of 16 texture layers. However, it would make life quite hard for artists when having to think in 4D...
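To make the cube idea a bit more concrete, here is a small Python sketch. It assumes the eight weights come from plain trilinear interpolation of the RGB value, which is one natural reading of the technique; I have not checked that the paper or any actual shader does exactly this, and the function names are invented for the example. Corner `i` uses bit 0 for red, bit 1 for green and bit 2 for blue.

```python
def cube_blend_weights(r, g, b):
    """Trilinear weights for the 8 corners of the RGB cube (assumed scheme).

    Corner index bits: bit 0 = red, bit 1 = green, bit 2 = blue.
    The eight weights always sum to 1.
    """
    weights = []
    for corner in range(8):
        wr = r if corner & 1 else 1.0 - r
        wg = g if corner & 2 else 1.0 - g
        wb = b if corner & 4 else 1.0 - b
        weights.append(wr * wg * wb)
    return weights

def cube_neighbours(corner):
    """The 3 corners that share an edge with the given corner."""
    return [corner ^ 1, corner ^ 2, corner ^ 4]

print(cube_blend_weights(0.5, 0.0, 0.0))  # even mix of corner 0 and corner 1
print(cube_neighbours(0))                 # corners reachable by changing one channel
```

Moving between two corners that share an edge only changes one channel, so only those two textures are mixed. Going between corners that are not neighbours passes through values where other textures get weight too, which is why each texture only blends nicely with 3 others.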

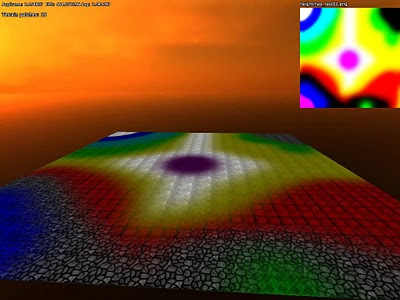

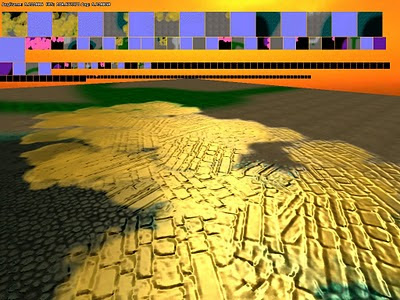

When implemented it looks like this (note the RGB texture in the upper right corner):

However, I ran into a few problems with this approach that I first thought were graphics card problems, but that later turned out to be my fault. During this I switched to using several layers of RGBA textures instead, blending 4 textures in each pass. When I discovered that it was my own error (doh!), I had already decided on using cache textures (more on that in a jiffy), which put less focus on the render speed of the blending. Also, this approach seemed nicer for artists. So I decided on a pretty much plain-vanilla approach, meaning some work was in vain, but perhaps I can have use for it later on instead.

Now for texture caching. This method basically works like the mega texture method used in Quake Wars and others. But instead of loading pieces of a gigantic texture at run-time, pieces of the gigantic texture are generated at run-time. To do this I keep several render textures in memory that are updated with content depending on what is in view. Also, depending on the geometry LOD I use, I vary the texture resolution rendered to and make it cover a larger area. So terrain close to the view uses high-resolution textures and terrain far away uses much lower resolution.
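The relation between LOD level, covered area and cache resolution could look something like the Python sketch below. All the numbers, and the choice to double the area while halving the resolution per level, are assumptions made up for illustration; the post only says that resolution goes down and coverage goes up with distance.

```python
def cache_patch_for_lod(lod, base_world_size=32.0, base_resolution=1024,
                        min_resolution=64):
    """Hypothetical cache patch settings for a geometry LOD level.

    Each level covers twice the world-space width of the previous one
    and is rendered at half the resolution, down to min_resolution.
    Returns (world_size, resolution, texels_per_world_unit).
    """
    world_size = base_world_size * (2 ** lod)
    resolution = max(base_resolution >> lod, min_resolution)
    return world_size, resolution, resolution / world_size

for lod in range(4):
    print(lod, cache_patch_for_lod(lod))
```

With these example values the texel density drops by a factor of four per level, so distant patches are cheap to render and to keep in memory.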

I first thought I had to do some special fading between the levels and was a bit concerned about how to do this. However, it turned out that this was taken care of quite nicely by the trilinear texture filtering (especially when generating mipmaps for each rendered texture). When implemented, the algorithm proved very fast, as the textures do not have to be updated very often, and I got very high levels of detail in the terrain.

Side note: The algorithm is actually used in Halo Wars and is mentioned in a nice lecture that you can see here. Seeing this also made me confident that it was a viable approach.

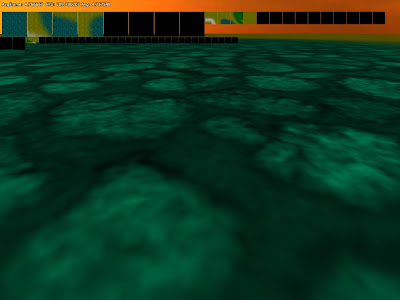

The algorithm was not without problems though. For example, the filtering between patches (different texture caches) created seams, as can be seen below:

The way I fixed this was simply to let each texture have a border that mimicked all of the surrounding textures. While the idea was simple, it was actually non-trivial to implement. For example, I started out with a 1 pixel border, but had to have an 8 pixel border for the highest 1024x1024 textures to be able to shrink it. Anyhow, I did get it working, making it look like this:

(Again, click image to see full size!)
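One way to read the 8 pixel figure (this is my interpretation, not something stated outright above) is that the border shrinks along with the texture whenever it is scaled down, for instance in the mipmap chain. A tiny Python sketch of that arithmetic, with a made-up function name:

```python
def border_at_resolution(border_px, full_resolution, target_resolution):
    """Width of the border after scaling the texture down (in pixels)."""
    return border_px * target_resolution / full_resolution

# An 8 pixel border on a 1024 texture is still a whole pixel at 128.
print(border_at_resolution(8, 1024, 128))
# A 1 pixel border is already sub-pixel at that size.
print(border_at_resolution(1, 1024, 128))
```

Under that reading, a 1 pixel border stops being a full pixel after the first halving, so the filter starts picking up texels that do not match the neighbouring patch.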

Next up was improving the blending. The normal blending for texture splatting can be quite boring, and instead of just using a linear blend I wanted to spice it up a bit. I found a very nice technique for this on Max McGuire's blog, which you can see here. Basically each material gets an alpha that determines how fast each part of it fades. The algorithm I ended up with was a bit different from the one outlined in Max's blog and looks like this:

final_alpha = clamp( (dissolve_alpha - (1.0 - blend_alpha)) / (dissolve_alpha * (1.0 - fade_start)), 0.0, 1.0);

Here final_alpha is used to blend the color for a texture, and fade_start determines at which alpha value the fade starts (this allows the texture to disappear piece by piece). blend_alpha is read from the blend texture, and dissolve_alpha is stored in the texture itself, telling when each part of the texture fades out.
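For anyone who wants to play with the numbers, here is the formula as a small Python function. It takes the numerator as dissolve_alpha - (1.0 - blend_alpha) and the denominator as dissolve_alpha * (1 - fade_start). The `eps` guard against division by zero is my own addition for the sketch and is not part of the formula.

```python
def clamp(value, low, high):
    return max(low, min(high, value))

def final_alpha(dissolve_alpha, blend_alpha, fade_start, eps=1e-6):
    """CPU-side version of the blend formula, for experimenting.

    eps is an assumption added here to avoid dividing by zero when
    dissolve_alpha is 0 or fade_start is 1.
    """
    numerator = dissolve_alpha - (1.0 - blend_alpha)
    denominator = max(dissolve_alpha * (1.0 - fade_start), eps)
    return clamp(numerator / denominator, 0.0, 1.0)

# Same blend_alpha, different dissolve_alpha in the material:
print(final_alpha(0.8, 0.5, 0.0))  # this texel is already partly visible
print(final_alpha(0.3, 0.5, 0.0))  # this texel is still fully hidden
```

Texels with a high dissolve_alpha show up early as blend_alpha rises and texels with a low value show up late, which is what breaks up the otherwise smooth linear transition.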

So instead of having blending like this:

It can look like this:

The next step for me was to allow not just diffuse textures, but also normal mapping and specular. This was done by simply rendering to more render targets, so each type had a separate texture. This would not have been possible if I had blended in real-time, as I would have reached the normal limit of 16 textures quite fast. But now I render them separately, and when rendering the final real-time terrain I only need one texture for each type (taken from the cache textures). Here is how all this looks combined:

Now for a final thing. Since the cache textures are not rendered very often, I can do quite a lot of heavy stuff in them. And one thing I was sure we needed was decals. What I did was simply to render a lot of quads to the textures, which are blended with the existing texture. This can be used to add all sorts of extra detail to the map and requires almost no extra power. Here is an example:

I am pretty happy with these features for now, although there is some stuff left to add. One thing I need to do is some kind of real-time conversion to DXT textures for the caches. This would save quite a lot of memory (4 - 8 times less would be used by terrain) and would also speed up rendering. Another thing I want to investigate is adding shadows, SSAO and other effects when rendering each cache texture. On top of this there is also some bad visual popping when levels are changed (this only happens when zooming out at a steep angle though) that I probably need to fix later on.
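The 4 - 8 times figure follows from the block sizes of the DXT formats: DXT1 stores each 4x4 pixel block in 8 bytes and DXT5 in 16 bytes, compared to 64 bytes for the same block in uncompressed 32-bit RGBA. A quick Python check (the function name is made up, and mipmaps are ignored):

```python
def texture_bytes(width, height, fmt):
    """Size in bytes of a single mip level in the given format."""
    if fmt == "RGBA8":
        return width * height * 4
    # DXT formats store 4x4 pixel blocks; round partial blocks up.
    blocks = ((width + 3) // 4) * ((height + 3) // 4)
    block_bytes = {"DXT1": 8, "DXT5": 16}[fmt]
    return blocks * block_bytes

raw = texture_bytes(1024, 1024, "RGBA8")
for fmt in ("DXT1", "DXT5"):
    print(fmt, raw // texture_bytes(1024, 1024, fmt))
```

So a cache texture without an alpha channel can shrink by a factor of 8 with DXT1, and one that needs alpha by a factor of 4 with DXT5.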

Now my next task will be to add generated undergrowth! So expect to see some swaying grass in the next tech feature!
